The field of artificial intelligence (AI) has captivated imaginations for decades, promising to revolutionize countless aspects of human life. While the concept of intelligent machines has been explored in fiction for centuries, the modern pursuit of AI emerged in the mid-20th century, fueled by a confluence of technological advancements and intellectual curiosity.

As we delve into the origins of AI, the question arises: who can be considered the “father” of this transformative field?

The answer, however, is not as straightforward as it might seem. While certain individuals played pivotal roles in laying the groundwork for AI, the field’s development has been a collaborative effort involving numerous researchers, mathematicians, and computer scientists across different disciplines.

This article explores the historical context surrounding the emergence of AI, highlighting the contributions of key figures and examining the evolution of the field over time.

The Birth of Artificial Intelligence

The concept of artificial intelligence (AI) has been around for centuries, but its modern development began in the mid-20th century. The early pioneers of AI were driven by a desire to understand the nature of intelligence and to create machines that could perform tasks that were previously thought to be the exclusive domain of humans.

Early Pioneers and Their Contributions

These pioneers were a diverse group of scientists, mathematicians, and philosophers from varied backgrounds. The figures below made some of the most influential early contributions.

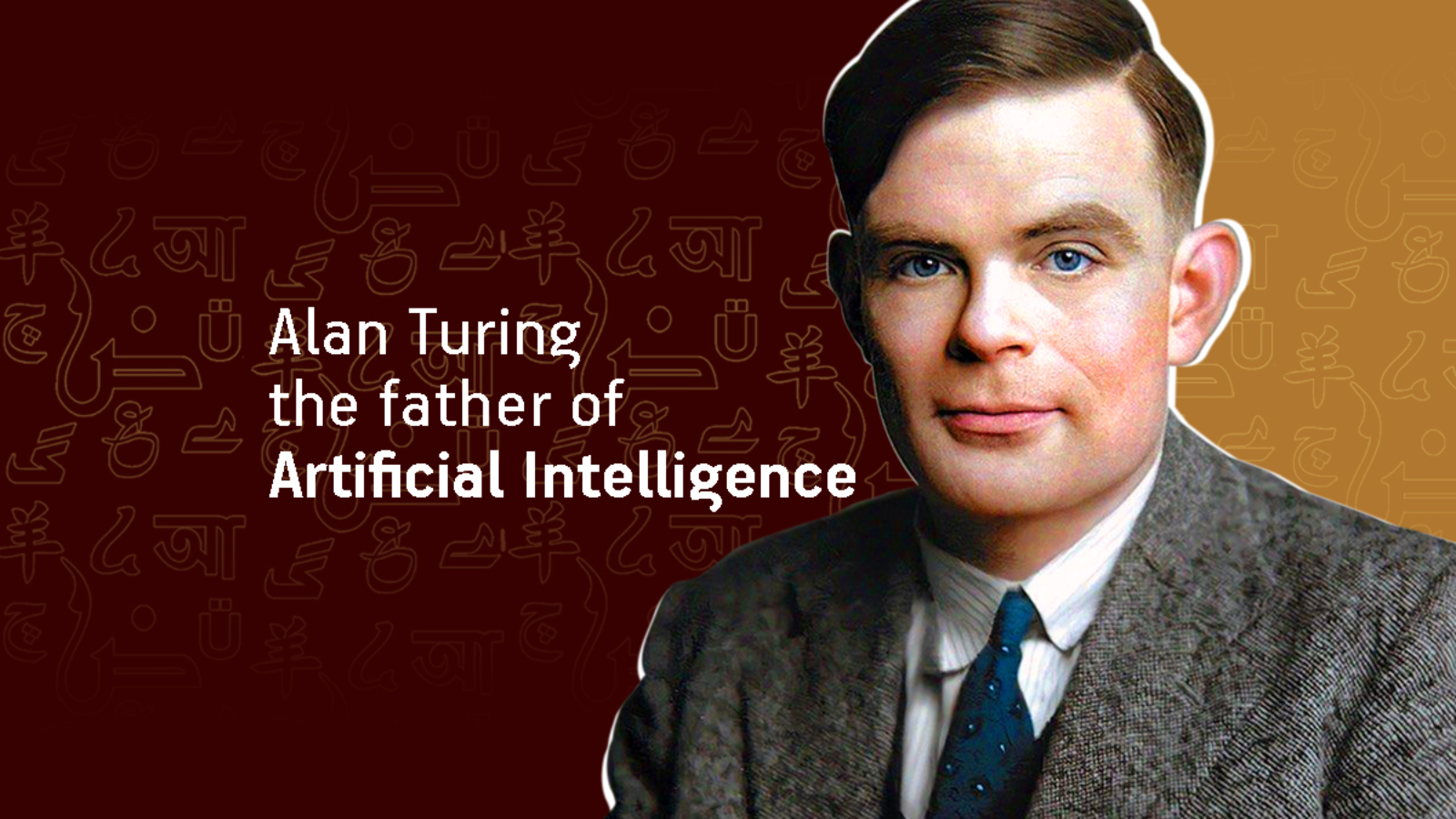

- Alan Turing, a British mathematician, is considered one of the founding fathers of theoretical computer science and artificial intelligence. In his seminal 1950 paper, “Computing Machinery and Intelligence,” Turing proposed the Turing Test, a test of a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human.

The Turing Test remains an influential reference point in the field of AI, although it has been criticized for being too anthropocentric and for failing to capture the full range of human intelligence.

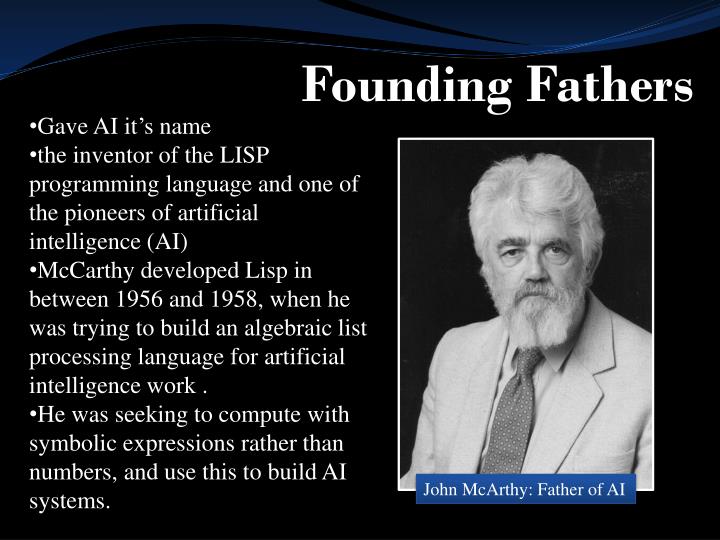

- John McCarthy, an American computer scientist, is often called the “father of AI.” He coined the term “artificial intelligence” and co-organized the Dartmouth Summer Research Project on Artificial Intelligence in 1956, which is widely considered to be the birth of the field of AI.

McCarthy’s work focused on developing programming languages and techniques for AI, including the Lisp programming language, which was the dominant language of AI research for decades.

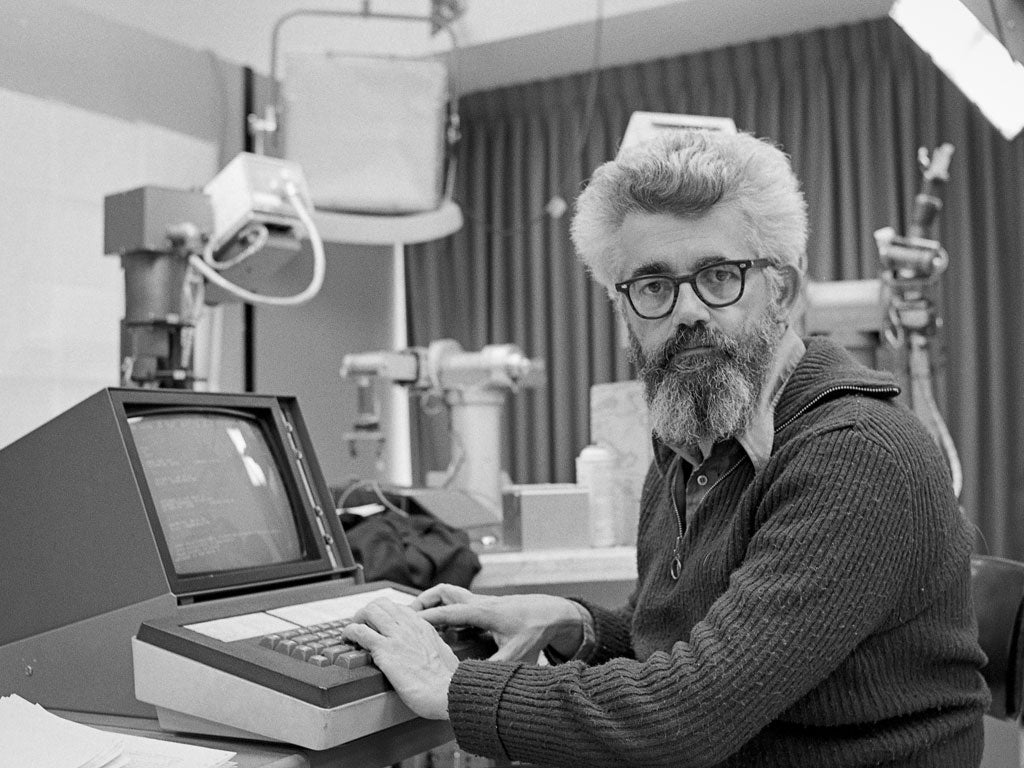

- Marvin Minsky, an American cognitive scientist, was a pioneer in the field of AI, particularly in the areas of artificial neural networks and machine learning. He was a co-founder of the MIT Artificial Intelligence Laboratory and made significant contributions to the development of early AI systems.

Minsky was also a strong advocate for the importance of interdisciplinary research in AI, believing that it was essential to draw on insights from fields such as psychology, linguistics, and neuroscience to develop truly intelligent machines.

- Claude Shannon, an American mathematician and electrical engineer, is known for his work on information theory, which has had a profound impact on the development of AI. Shannon’s work on the mathematical theory of communication laid the foundation for the development of modern communication systems, including the internet, and also provided a framework for understanding how information is processed and transmitted.

His work has been instrumental in the development of AI algorithms for natural language processing, machine learning, and other areas.
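Shannon’s idea of quantifying information can be made concrete with his entropy measure, which gives the average number of bits needed per symbol of a message. The following is a minimal sketch in Python; the function name `shannon_entropy` and the sample strings are illustrative assumptions, and symbol probabilities are simply estimated from character frequencies in the message.

```python
import math
from collections import Counter


def shannon_entropy(message: str) -> float:
    """Return the average information per symbol of `message`, in bits."""
    counts = Counter(message)
    total = len(message)
    # H = sum over symbols of p * log2(1 / p), with p estimated from frequencies
    return sum((n / total) * math.log2(total / n) for n in counts.values())


uniform = shannon_entropy("abcd")   # four equally likely symbols: 2.0 bits
repeated = shannon_entropy("aaaa")  # a fully predictable message: 0.0 bits
print(uniform, repeated)
```

A message whose symbols are all equally likely carries the most information per symbol, while a fully predictable message carries none.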

The Dartmouth Conference

The Dartmouth Conference, held in 1956, is widely considered to be the birth of the field of AI. The conference was organized by John McCarthy, Marvin Minsky, Claude Shannon, and Nathaniel Rochester, and brought together a group of leading researchers in the field to discuss the potential of AI.

The conference participants agreed that AI was a worthy field of study and that it had the potential to revolutionize many aspects of human life.

The Dartmouth Conference was a significant milestone in the history of AI. It marked the beginning of a period of intense research and development in the field, which led to the development of many important AI technologies, such as expert systems, natural language processing, and machine learning.

Key Figures in AI’s Development

The field of artificial intelligence (AI) owes its existence to the pioneering work of several brilliant minds who laid the groundwork for this transformative technology. Their contributions, spanning decades, have shaped the trajectory of AI research and development, leading to the advancements we see today.

Early Pioneers and Foundational Concepts

The origins of AI can be traced back to the mid-20th century, with the emergence of key figures who contributed significantly to the field’s nascent stages.

- Alan Turing (1912-1954), a British mathematician and computer scientist, is considered the father of theoretical computer science and artificial intelligence. His seminal 1950 paper, “Computing Machinery and Intelligence,” introduced the Turing Test, a benchmark for determining a machine’s ability to exhibit intelligent behavior.

The Turing Test remains a touchstone in discussions of AI, sparking debate about the nature of intelligence and the possibility of creating truly intelligent machines.

- John McCarthy (1927-2011), an American computer scientist, coined the term “artificial intelligence” in 1955 and organized the Dartmouth Summer Research Project on Artificial Intelligence, widely considered the birthplace of AI as a field. McCarthy’s contributions to AI include the development of Lisp, a programming language designed for AI applications, and his work on formalizing the concept of intelligence.

- Claude Shannon (1916-2001), an American mathematician and electrical engineer, is known for his work on information theory, which laid the foundation for understanding and quantifying information. Shannon’s work on Boolean algebra and digital circuits was instrumental in the development of computers, which became essential tools for AI research.

- Marvin Minsky (1927-2016), an American cognitive scientist, is considered one of the founding fathers of AI. Minsky’s contributions include his work on artificial neural networks, machine learning, and cognitive psychology. He co-founded the MIT Artificial Intelligence Laboratory (MIT AI Lab) in 1959 and was a leading figure in the development of AI theory and practice.

Machine Learning: The Power of Data

Machine learning, a subfield of AI, enables computers to learn from data without explicit programming. This paradigm shift revolutionized AI, allowing systems to adapt and improve their performance over time.

- Arthur Samuel (1901-1990), an American computer scientist, is credited with coining the term “machine learning” in 1959. Samuel’s work on checkers-playing programs demonstrated the potential of computers to learn from experience. His program, developed in the 1950s, was one of the first self-learning programs and eventually played well enough to challenge skilled amateur players.

- Frank Rosenblatt (1928-1971), an American psychologist, developed the Perceptron in the late 1950s, one of the first artificial neural networks capable of learning. This groundbreaking invention marked a significant step towards creating machines that could learn from data and adapt to new situations. The Perceptron sparked early enthusiasm for neural networks and laid the foundation for the development of more sophisticated learning algorithms.
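To illustrate what “learning from data” meant for a perceptron, here is a minimal sketch of the classic perceptron learning rule in Python. It is a simplified software illustration, not a reconstruction of Rosenblatt’s original system; the function names, the learning rate, and the AND-gate training data are assumptions chosen for the example.

```python
def predict(weights, bias, x1, x2):
    """Threshold unit: output 1 if the weighted sum is positive, else 0."""
    return 1 if weights[0] * x1 + weights[1] * x2 + bias > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Adjust weights whenever a prediction is wrong (perceptron rule)."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            error = target - predict(weights, bias, x1, x2)
            # Nudge each weight in the direction that reduces the error
            weights[0] += lr * error * x1
            weights[1] += lr * error * x2
            bias += lr * error
    return weights, bias


# Hypothetical training data: the logical AND of two binary inputs
and_gate = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_gate)
for (x1, x2), target in and_gate:
    print((x1, x2), predict(weights, bias, x1, x2), target)
```

The rule converges here because AND is linearly separable: a single straight line can separate the inputs that should produce 1 from those that should produce 0.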

Natural Language Processing: Understanding Human Communication

Natural language processing (NLP) focuses on enabling computers to understand, interpret, and generate human language. This field has enabled AI systems to interact with humans in a more natural and intuitive way.

- Noam Chomsky (born 1928), an American linguist, revolutionized the field of linguistics with his theory of generative grammar. Chomsky’s work provided a formal framework for understanding the structure and rules of language, paving the way for NLP research. His theories have had a profound impact on the development of language models and machine translation systems.

- George Miller (1920-2012), an American psychologist, is known for his research on human cognition and language. Miller’s work on the limitations of short-term memory and the concept of “chunks” of information contributed significantly to our understanding of how humans process language. These insights have been applied to the development of NLP systems, particularly in areas like speech recognition and text comprehension.

Robotics: Building Intelligent Machines

Robotics, the field of designing, constructing, operating, and applying robots, has been intertwined with AI since its inception. AI algorithms provide robots with the intelligence needed to perform complex tasks, navigate environments, and interact with humans.

- Joseph Engelberger (1925-2015), an American engineer, is known as the “father of robotics” for his pioneering work with inventor George Devol in developing and commercializing Unimate, the first industrial robot, which went into service in 1961. Engelberger’s vision of using robots to automate tasks in manufacturing and other industries revolutionized the field of robotics and paved the way for the development of more sophisticated robotic systems.

- Hans Moravec (born 1948), an Austrian-born Canadian roboticist, is known for his work on autonomous robots and his predictions about the future of AI. He is the namesake of “Moravec’s paradox,” the observation that tasks that are easy for humans, such as walking and recognizing objects, are difficult for robots, while tasks that are difficult for humans, such as complex mathematical calculations, are relatively easy for computers.

This paradox highlights the challenges and opportunities in developing robots that can match human capabilities.

A Timeline of AI Milestones

| Year | Milestone | Description |
|---|---|---|
| 1950 | Turing Test proposed | Alan Turing introduces the Turing Test, a benchmark for determining a machine’s ability to exhibit intelligent behavior. |
| 1956 | Dartmouth Summer Research Project | The Dartmouth Summer Research Project, organized by John McCarthy and colleagues, is widely considered the birthplace of AI as a field. |
| 1959 | Self-learning checkers program | Arthur Samuel describes a checkers-playing program that learns from experience and coins the term “machine learning.” |
| 1961 | First industrial robot | Unimate, developed by George Devol and Joseph Engelberger, goes into service as the first industrial robot, marking a significant milestone in the development of robotics. |
| 1966 | ELIZA chatbot | Joseph Weizenbaum creates ELIZA, a chatbot that simulates conversation with a psychotherapist, demonstrating the potential of natural language processing. |
| 1972 | SHRDLU program | Terry Winograd develops SHRDLU, a program that can understand and respond to natural language commands in a limited world, showcasing the potential of AI for natural language interaction. |
| 1980s | Expert systems boom | Expert systems, which use AI to solve problems in specific domains, become popular, leading to the development of systems for medical diagnosis, financial analysis, and other applications. |
| 1997 | Deep Blue defeats Garry Kasparov | IBM’s Deep Blue chess-playing computer defeats world chess champion Garry Kasparov, marking a significant milestone in the development of AI for game playing. |
| 2011 | Watson wins Jeopardy! | IBM’s Watson, a question-answering system, wins the game show Jeopardy!, demonstrating the ability of AI to process and understand natural language. |
| 2016 | AlphaGo defeats Go champion | Google DeepMind’s AlphaGo defeats Go champion Lee Sedol, marking a significant breakthrough in the development of AI for complex game playing. |
| 2017 | Self-driving ride service trial | Waymo, a subsidiary of Alphabet, begins offering rides in self-driving cars to members of the public in the Phoenix area, demonstrating the potential of AI for transportation. |

The Evolution of AI

The field of artificial intelligence (AI) has undergone a remarkable evolution, characterized by a shift from early approaches focused on symbolic reasoning to modern paradigms like deep learning. This journey has been marked by breakthroughs, challenges, and a constant quest to create machines capable of mimicking human intelligence.

The Rise of Symbolic AI

Symbolic AI, also known as good old-fashioned AI (GOFAI), dominated the early years of the field. This approach focused on representing knowledge in the form of symbols and rules, allowing computers to reason and solve problems by manipulating these symbols.

Programs like the General Problem Solver (GPS) and the Logic Theorist were early examples of this paradigm. Symbolic AI was successful in solving well-defined problems with clear rules, such as playing chess or proving mathematical theorems. However, it struggled with tasks requiring real-world knowledge, common sense, and the ability to learn from experience.
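The symbol-and-rule style of reasoning described above can be sketched with a tiny forward-chaining rule engine in Python. This is a generic illustration of rule-based inference, not a reconstruction of the General Problem Solver or the Logic Theorist; the facts, rules, and the function name `forward_chain` are hypothetical.

```python
def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new facts can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all of its premises are already known
            if conclusion not in known and premises <= known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical knowledge base: (set of premises, conclusion)
rules = [
    (frozenset({"has_feathers"}), "is_bird"),
    (frozenset({"is_bird", "can_swim", "cannot_fly"}), "is_penguin"),
]
derived = forward_chain({"has_feathers", "can_swim", "cannot_fly"}, rules)
print(sorted(derived))
```

The sketch also shows the paradigm’s main weakness: the system knows only what its hand-written rules state, so anything not anticipated by the rule author is out of reach.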

The Emergence of Machine Learning

The limitations of symbolic AI led to growing interest in machine learning (ML), a paradigm that focuses on enabling computers to learn from data rather than follow hand-written rules. Widely used ML techniques include decision trees, support vector machines, and Bayesian networks. These methods allow machines to identify patterns and make predictions based on data, paving the way for applications like spam filtering, medical diagnosis, and financial forecasting.
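As a rough illustration of learning a rule from data instead of writing it by hand, the sketch below fits a one-level decision tree (a “decision stump”) for a toy spam filter in Python. The data, the single feature (a count of suspicious words per message), and the function names are all hypothetical; real decision-tree learners consider many features and use more refined splitting criteria.

```python
def fit_stump(values, labels):
    """Choose the threshold on one feature that misclassifies the fewest examples."""
    best_threshold, best_errors = None, len(values) + 1
    for threshold in sorted(set(values)):
        # Candidate rule: predict spam (1) when value >= threshold
        errors = sum((v >= threshold) != bool(y) for v, y in zip(values, labels))
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def classify(threshold, value):
    """Apply the learned rule to a new example."""
    return int(value >= threshold)


# Hypothetical training data: suspicious-word counts and spam labels
word_counts = [0, 1, 1, 2, 5, 6, 7, 9]
is_spam = [0, 0, 0, 0, 1, 1, 1, 1]

threshold = fit_stump(word_counts, is_spam)
print(threshold)                # 5
print(classify(threshold, 8))   # 1 (spam)
print(classify(threshold, 1))   # 0 (not spam)
```

The threshold is never written into the program; it is chosen from the examples, which is the essential difference from the rule-based approach.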

The Deep Learning Revolution

Deep learning, a subfield of ML, rose to prominence in the 2010s and revolutionized AI. Deep learning algorithms, loosely inspired by the structure of the human brain, use artificial neural networks with multiple layers to extract complex features from data. This ability to learn hierarchical representations has led to breakthroughs in areas such as image recognition, natural language processing, and speech synthesis.

Deep learning now underpins systems such as self-driving cars, virtual assistants, and personalized recommendation engines.
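Why multiple layers matter can be seen in a very small example. A single threshold unit cannot compute XOR (exclusive or), but two stacked layers can, because the hidden layer first extracts simpler features that the output layer then combines. The Python sketch below uses hand-chosen weights purely for illustration; actual deep networks have many more units and layers and learn their weights from data.

```python
def step(x):
    """Threshold activation: 1 if the input is positive, else 0."""
    return 1 if x > 0 else 0


def two_layer_xor(x1, x2):
    # Hidden layer: each unit detects a simple feature of the input
    h_or = step(x1 + x2 - 0.5)    # fires if at least one input is 1
    h_and = step(x1 + x2 - 1.5)   # fires only if both inputs are 1
    # Output layer combines the hidden features: "or, but not and"
    return step(h_or - h_and - 0.5)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, two_layer_xor(a, b))
```

The hidden units here play the role of intermediate features; in a trained deep network, such features are discovered automatically and stacked over many layers.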

Comparing AI Paradigms

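| Paradigm | Strengths | Limitations |
|---|---|---|
| Symbolic AI | Solves well-defined problems with clear rules, such as playing chess or proving theorems | Struggles with real-world knowledge, common sense, and learning from experience |
| Machine Learning | Identifies patterns and makes predictions from data | Can inherit biases from the data it is trained on |
| Deep Learning | Learns hierarchical representations for tasks such as image recognition and natural language processing | Can be complex and opaque, making its decisions difficult to explain |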

Impact of AI Techniques on Industries

| Industry | AI Technique | Impact |
|---|---|---|
| Healthcare | Deep learning for medical image analysis | Improved diagnosis, personalized treatment plans, and drug discovery |
| Finance | Machine learning for fraud detection and risk assessment | Enhanced security, improved decision-making, and reduced losses |
| Manufacturing | Robotics and computer vision for automation | Increased efficiency, reduced costs, and improved product quality |
| Retail | Recommender systems and chatbots for customer service | Personalized shopping experiences, improved customer satisfaction, and increased sales |
| Transportation | Autonomous vehicles and traffic management systems | Enhanced safety, reduced congestion, and improved fuel efficiency |

The Impact of AI on Society

Artificial intelligence (AI) is rapidly transforming various aspects of our lives, with profound implications for society. From healthcare to transportation, AI offers a range of potential benefits, but also presents ethical considerations and challenges. This section explores the multifaceted impact of AI on society, examining its potential benefits, ethical implications, and potential risks.

Benefits of AI in Various Fields

AI has the potential to revolutionize numerous fields, improving efficiency, accuracy, and access to services. Here are some examples:

- Healthcare: AI can assist in disease diagnosis, drug discovery, personalized treatment plans, and robotic surgery. AI-powered systems can analyze vast amounts of medical data to identify patterns and predict potential health risks, enabling early intervention and more effective treatment strategies.

- Education: AI can personalize learning experiences, provide adaptive tutoring, and automate administrative tasks. AI-powered educational platforms can assess student progress, identify areas needing improvement, and offer customized learning materials, catering to individual learning styles and needs.

- Transportation: AI is driving the development of autonomous vehicles, which have the potential to improve road safety, reduce traffic congestion, and enhance accessibility for people with disabilities. AI-powered traffic management systems can optimize traffic flow and reduce travel time.

Ethical Considerations in AI Development and Deployment

The rapid advancement of AI raises significant ethical concerns that need to be addressed. These considerations include:

- Bias and Fairness: AI systems can inherit biases from the data they are trained on, leading to discriminatory outcomes. It is crucial to ensure that AI algorithms are fair, unbiased, and do not perpetuate existing social inequalities.

- Privacy and Data Security: AI applications often require access to large amounts of personal data. Protecting user privacy and ensuring the secure handling of sensitive information are essential ethical considerations.

- Transparency and Explainability: AI systems can be complex and opaque, making it difficult to understand how they reach their decisions. Ensuring transparency and explainability is crucial for building trust and accountability in AI systems.

Potential Risks and Challenges of AI

While AI offers significant potential benefits, it also presents certain risks and challenges that need to be carefully considered. These include:

- Job Displacement: AI automation has the potential to displace workers in certain sectors, leading to unemployment and economic disruption. It is essential to address the potential impact on employment and invest in retraining programs to prepare workers for the changing job market.

- Weaponization of AI: The development of autonomous weapons systems raises serious ethical and security concerns. It is crucial to establish international regulations and ethical guidelines to prevent the misuse of AI in warfare.

- Existential Risks: Some experts warn of the potential for superintelligent AI to pose existential risks to humanity. While this remains a speculative concern, it highlights the importance of responsible AI development and research.

The Future of Artificial Intelligence

The future of artificial intelligence (AI) holds immense potential for transforming various aspects of human life. Advancements in AI research and development, coupled with increasing computational power, are paving the way for unprecedented breakthroughs.

Emerging Trends in AI Research and Development

Emerging trends in AI research and development are driving innovation and pushing the boundaries of what AI can achieve. Two key areas of focus are quantum computing and neuromorphic computing.

Quantum Computing

Quantum computing leverages the principles of quantum mechanics to perform certain computations in ways that are impractical for traditional computers. Researchers hope that quantum computers will eventually handle problems that are intractable for classical computers, in areas such as drug discovery, materials science, and financial modeling.

Neuromorphic Computing

Neuromorphic computing aims to mimic the structure and function of the human brain. It uses artificial neural networks that are inspired by biological neurons and synapses. Neuromorphic chips are designed to be energy-efficient and capable of real-time learning and adaptation.

Potential Applications of AI

AI has the potential to revolutionize various industries and aspects of human life. Some key areas where AI is expected to have a significant impact include personalized medicine, climate change, and space exploration.

Personalized Medicine

AI is transforming healthcare by enabling personalized medicine. AI algorithms can analyze vast amounts of patient data, including genetic information, medical history, and lifestyle factors, to identify patterns and predict disease risk. This allows for tailored treatment plans and preventive measures.

Climate Change

AI can play a crucial role in addressing climate change. AI-powered systems can analyze climate data, optimize energy consumption, and develop sustainable solutions. For example, AI can help in monitoring deforestation, predicting extreme weather events, and designing more efficient renewable energy systems.

Space Exploration

AI is becoming increasingly important in space exploration. AI-powered robots can perform tasks in harsh environments, such as exploring distant planets and asteroids. AI can also analyze data from space missions, helping scientists understand the universe better.

The Role of AI in Shaping the Future of Humanity

AI has the potential to shape the future of humanity in profound ways. It can enhance productivity, improve healthcare, and solve complex problems. However, it also raises ethical and societal concerns that need to be addressed.

Ethical Considerations

As AI becomes more powerful, it is crucial to address ethical considerations. These include issues such as bias in algorithms, data privacy, and the potential for job displacement. It is essential to develop ethical frameworks and guidelines for AI development and deployment.

Social Impact

AI will have a significant impact on society. It is expected to create new jobs and industries while also displacing others. It is important to prepare for these changes and ensure that everyone benefits from the advancements in AI.

Final Wrap-Up

The pursuit of AI is a continuous journey, marked by both breakthroughs and challenges. While the “father” of AI may be a contested title, the field’s legacy is undeniably shaped by the contributions of countless individuals. As AI continues to evolve, it is essential to acknowledge the historical context that shaped its development, to appreciate the collective effort that brought us to this point, and to thoughtfully consider the ethical and societal implications of this powerful technology.

Quick FAQs

Is there one definitive “father” of AI?

No, there is no single individual who can be definitively crowned as the “father” of AI. The field’s development was a collaborative effort involving many pioneers.

What was the significance of the Dartmouth Conference?

The Dartmouth Conference in 1956 is widely considered a landmark event in the history of AI. It brought together leading researchers to discuss and formalize the field, marking the official birth of AI as a distinct area of study.

What are some of the key ethical considerations surrounding AI?

Ethical concerns surrounding AI include potential job displacement, bias in algorithms, privacy issues, and the responsible use of AI in autonomous systems.