Artificial intelligence (AI), a field that has captivated imaginations and reshaped the world, is not the product of a single mind but rather the culmination of decades of collaborative effort. From the initial sparks of theoretical concepts to the development of sophisticated algorithms and systems, AI’s journey is interwoven with the contributions of visionary individuals, pioneering institutions, and the collective spirit of researchers.

This exploration delves into the origins of AI, tracing its evolution from the early dreams of computing machines capable of thought to the remarkable advancements that define our present and shape our future. We will examine the key individuals who laid the foundations, the milestones that marked significant progress, and the ongoing research that continues to push the boundaries of what AI can achieve.

The Birth of AI

AI is a relatively young field, but its roots extend far back in history. The idea of machines that can think like humans has fascinated philosophers and scientists for centuries, and the path from early philosophical speculation to today's sophisticated AI systems has been shaped by the contributions of numerous pioneers.

Early Concepts and Influences

The idea of artificial intelligence has its roots in ancient myths and legends. Stories of artificial beings, such as Talos, the bronze automaton of Greek myth, or the Golem of Jewish folklore, show that humans have long been intrigued by the possibility of creating artificial life and intelligence.

However, the seeds of modern AI were sown in the mid-20th century, fueled by advancements in computing and a growing understanding of the human brain.

The Birth of AI: Dartmouth Workshop

The Dartmouth Summer Research Project on Artificial Intelligence, held in 1956, is widely considered to be the birthplace of AI as a distinct field of study. Organized by John McCarthy, Marvin Minsky, Claude Shannon, and Nathaniel Rochester, the workshop brought together a group of leading researchers to discuss the possibility of creating machines that could “think” and “learn” like humans.

The workshop itself produced no major breakthroughs, but it established AI as a named field with its own research community and served as a crucial catalyst for AI research.

Key Pioneers and Their Contributions

- Alan Turing: A British mathematician and computer scientist, Turing is considered one of the founding fathers of theoretical computer science and artificial intelligence. His seminal 1950 paper, “Computing Machinery and Intelligence,” introduced the Turing Test (which Turing called the “imitation game”), a benchmark for determining whether a machine can exhibit intelligent behavior indistinguishable from that of a human.

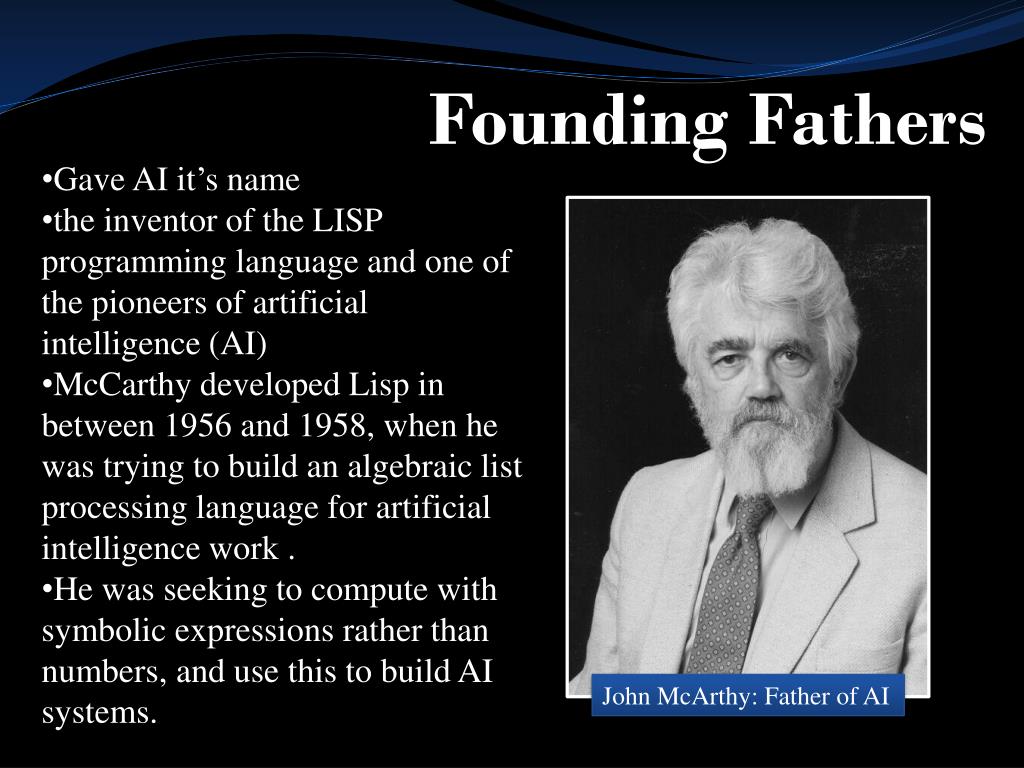

- John McCarthy: An American computer scientist, McCarthy coined the term “artificial intelligence” in the 1955 proposal for the Dartmouth workshop. In 1958 he created the LISP programming language, which became a cornerstone of AI research.

- Marvin Minsky: An American cognitive scientist, Minsky made significant contributions to artificial intelligence, cognitive psychology, and robotics. He co-founded the MIT Artificial Intelligence Laboratory with John McCarthy and wrote influential books on AI, including “Perceptrons” (with Seymour Papert) and “The Society of Mind.”

- Arthur Samuel: An American pioneer in computer game-playing and artificial intelligence, Samuel began work in 1952 on a checkers program that became one of the first programs to improve its play through experience, and he popularized the term “machine learning” in 1959. His work demonstrated the potential of AI for solving complex problems and learning from experience.

Foundational Ideas and Concepts

The Dartmouth workshop and the work of early AI pioneers laid the groundwork for several key concepts that continue to drive AI research today.

- Machine Learning: A branch of AI that focuses on algorithms that enable computers to learn from data rather than follow explicitly programmed rules for every case. Machine learning algorithms can identify patterns in data, make predictions, and improve their performance as they are exposed to more data.

- Natural Language Processing (NLP): A field of AI that deals with the interaction between computers and human language. NLP techniques enable computers to understand, interpret, and generate human language, facilitating tasks such as machine translation, text summarization, and chatbot development.

- Robotics: A field of engineering that focuses on the design, construction, operation, and application of robots. Robotics is closely intertwined with AI, as robots are often equipped with AI systems to enable them to perform complex tasks, navigate environments, and interact with humans.

Key Milestones in AI Development

The journey of artificial intelligence has been marked by remarkable breakthroughs, each pushing the boundaries of what machines can achieve. From the early days of simple algorithms to the sophisticated systems we see today, AI has evolved significantly, shaping our world in profound ways.

Early Foundations (1940s-1950s)

This period saw the emergence of foundational concepts and the first attempts to create AI systems.

- 1943: McCulloch-Pitts Neuron: Warren McCulloch and Walter Pitts proposed a mathematical model of a neuron as a simple threshold unit that fires when its combined inputs reach a threshold, and showed that networks of such units could compute logical functions. The model, inspired by biological neurons, had no learning rule of its own, but it laid the groundwork for artificial neural networks and for later systems capable of learning and processing information.

- 1950: Turing Test: Alan Turing proposed the Turing Test, a benchmark for evaluating a machine’s ability to exhibit intelligent behavior indistinguishable from a human. The test, which remains a topic of debate, set the stage for exploring the nature of intelligence and its potential realization in machines.

- 1956: Dartmouth Workshop: This workshop, widely considered the birthplace of AI, brought together leading researchers to discuss the possibilities of “thinking machines.” The term “artificial intelligence,” coined by John McCarthy in the 1955 proposal for the workshop, gave the field its identity and attracted further research interest.
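
The McCulloch-Pitts neuron is simple enough to state in a few lines. The Python sketch below is a simplified modern rendering, not the notation of the 1943 paper: binary inputs are weighted and summed, and the unit fires when the sum reaches a fixed threshold. The function names are illustrative, and using a negative weight for inhibition is an assumption (the original model treated inhibitory inputs as absolute vetoes). Note that every weight and threshold is set by hand; nothing here learns.

```python
def mcculloch_pitts(inputs, weights, threshold):
    """Return 1 (fire) if the weighted sum of binary inputs reaches the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# With fixed unit weights, the threshold alone decides which logic gate the unit computes.
def and_gate(a, b):
    return mcculloch_pitts([a, b], [1, 1], threshold=2)


def or_gate(a, b):
    return mcculloch_pitts([a, b], [1, 1], threshold=1)


def not_gate(a):
    # Simplification: a negative weight stands in for the original model's inhibitory input.
    return mcculloch_pitts([a], [-1], threshold=0)


for a in (0, 1):
    for b in (0, 1):
        print(f"a={a} b={b}  AND={and_gate(a, b)}  OR={or_gate(a, b)}")
```

Choosing the threshold is what turns the same unit into AND or OR, which is the sense in which networks of such units can compute logical functions.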

The Golden Age (1950s-1970s)

This period saw significant progress in AI research, fueled by optimism and breakthroughs in problem-solving and symbolic reasoning.

- 1959: The General Problem Solver (GPS): Allen Newell, J. C. Shaw, and Herbert Simon developed GPS, a program intended to solve a wide range of formally specified problems. It used means-ends analysis: comparing the current state with the goal, choosing an operation that reduces the difference between them, and breaking the problem into smaller subgoals along the way. This approach demonstrated the potential of AI for tackling complex tasks.

- 1966: ELIZA: Joseph Weizenbaum created ELIZA, a chatbot program that simulated conversation by matching keywords in the user's input and reassembling parts of it into scripted responses. While ELIZA had no real understanding, it showcased the potential of AI for natural language processing and interaction.

- 1972: SHRDLU: Terry Winograd developed SHRDLU, a program that understood typed English commands and questions about a simulated “blocks world” and could move the objects in it. SHRDLU showed that a program could understand language and reason about objects convincingly within a tightly limited environment, although the approach did not scale to the open-ended real world.
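
ELIZA's trick of matching a pattern and echoing part of the input back can be illustrated with a few regular expressions. This Python sketch is a loose approximation under stated assumptions: the rules, the `REFLECTIONS` table, and the function names are invented for illustration, and Weizenbaum's DOCTOR script used a richer scheme of ranked keywords with decomposition and reassembly rules.

```python
import re

# Hypothetical rules in the spirit of ELIZA: a pattern to look for and a
# response template that reuses the text captured by the pattern.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]

# Swap first- and second-person words so echoed text reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}


def reflect(fragment):
    """Rewrite a captured fragment from the user's point of view to the program's."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(sentence):
    """Return the reply for the first matching rule, or a neutral prompt if none match."""
    cleaned = sentence.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(*(reflect(group) for group in match.groups()))
    return "Please go on."


print(respond("I am sad about my job"))
```

Nothing in this program models meaning: the reply depends only on which surface pattern matched, which is exactly the limitation described above.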

AI Winter (1970s-1980s)

Despite early optimism, AI research entered a period of stagnation and reduced funding in the mid-1970s, as limited computing power and a shortage of practical results failed to justify earlier promises.

- Limited Computing Power: The computational resources available at the time were insufficient to handle the complex calculations required for advanced AI systems. This limitation hindered progress in areas such as natural language processing and machine learning.

- Unrealistic Expectations: The early hype surrounding AI led to unrealistic expectations. When these promises went unmet, disillusionment followed and funding was cut; in the UK, for example, the critical 1973 Lighthill report led to deep reductions in government support for AI research.

The Rise of Expert Systems (1980s)

The development of expert systems, programs designed to emulate the knowledge and reasoning abilities of human experts in specific domains, marked a resurgence in AI research.

- Expert Systems: These systems, often used in areas like medical diagnosis and financial analysis, relied on rule-based reasoning and knowledge representation techniques. Expert systems demonstrated the practical value of AI in specific domains, leading to renewed interest and investment in the field.

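- MYCIN: One of the best-known early expert systems, MYCIN was developed at Stanford in the 1970s to identify the bacteria causing severe infections and recommend antibiotic treatment. Although it was never used in clinical practice, evaluations found its recommendations comparable to those of human specialists, highlighting the potential of AI for improving healthcare and paving the way for the commercial expert systems of the 1980s.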
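
The rule-based reasoning behind expert systems can be illustrated with a tiny forward-chaining engine. This Python sketch is a simplification under stated assumptions: MYCIN itself reasoned backward from goals and attached certainty factors to its conclusions, whereas this version chains forward from known facts with no uncertainty, and its car-troubleshooting rules are hypothetical examples, not real domain knowledge.

```python
# Hypothetical rules: each pairs a set of premises with the fact they let us conclude.
RULES = [
    ({"engine_does_not_crank", "lights_dim"}, "battery_weak"),
    ({"battery_weak"}, "recommend_charging_battery"),
    ({"engine_cranks", "fuel_gauge_empty"}, "recommend_refueling"),
]


def forward_chain(facts, rules):
    """Fire every rule whose premises are all known, repeating until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # premises <= known is a subset test: every premise is already a known fact.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = forward_chain({"engine_does_not_crank", "lights_dim"}, RULES)
print(sorted(derived))
```

Because the knowledge lives in the rule list rather than in the engine, experts can extend the system by adding rules, which is the design idea that made expert systems practical in narrow domains.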

The Machine Learning Revolution (1990s-Present)

The development of powerful algorithms and increased computational power led to a significant resurgence in AI research, particularly in the field of machine learning.

- Machine Learning: Rather than encoding expert knowledge as hand-written rules, as expert systems did, machine learning systems infer their behavior from examples, making predictions and improving their performance as they are trained on more data.

- Deep Learning: A subset of machine learning, deep learning uses artificial neural networks with multiple layers to extract complex features from data. Deep learning has revolutionized fields like image recognition, natural language processing, and speech recognition.
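
Learning from data rather than explicit programming can be made concrete with the perceptron learning rule, one of the earliest machine learning algorithms. The Python sketch below learns the logical OR function from four labeled examples instead of having its weights fixed by hand; the function names, learning rate, and epoch limit are illustrative choices. A single perceptron can only separate classes with a straight line, which is one reason deep learning stacks many layers of units.

```python
def train_perceptron(samples, epochs=20, lr=1.0):
    """Learn weights and a bias for a linear threshold unit from labeled examples.

    samples: list of (inputs, label) pairs, with label in {0, 1}.
    """
    n = len(samples[0][0])
    weights = [0.0] * n
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, label in samples:
            activation = sum(w * xi for w, xi in zip(weights, x)) + bias
            prediction = 1 if activation > 0 else 0
            error = label - prediction  # -1, 0, or +1
            if error:
                # Nudge the decision boundary toward the misclassified example.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
                errors += 1
        if errors == 0:  # every example classified correctly, so stop early
            break
    return weights, bias


def predict(weights, bias, x):
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


# Learn logical OR from its truth table.
OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w, b = train_perceptron(OR_DATA)
print(w, b, [predict(w, b, x) for x, _ in OR_DATA])
```

The same training loop applied to XOR never reaches zero errors, because no single straight line separates XOR's classes.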

Breakthroughs and Milestones (1990s-Present)

The last few decades have witnessed remarkable breakthroughs in AI, driven by advancements in machine learning and deep learning.

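- 1997: Deep Blue defeats Garry Kasparov: IBM’s Deep Blue chess computer defeated world chess champion Garry Kasparov in a six-game match, the first time a reigning world champion lost a match to a computer under standard tournament conditions. Deep Blue relied mainly on massive search and an evaluation function crafted with the help of chess experts rather than on machine learning, but its victory highlighted the potential of computers for strategic decision-making and problem-solving.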

- 2011: Watson wins Jeopardy!: IBM’s Watson, a question-answering system, defeated human champions on the game show Jeopardy!, demonstrating AI’s ability to process natural language and understand complex questions. Watson’s victory showcased the potential of AI for information retrieval and natural language understanding.

- 2016: AlphaGo defeats Lee Sedol: Google DeepMind’s AlphaGo defeated Lee Sedol, one of the world’s strongest Go players, four games to one, a significant achievement given the enormous number of possible positions in Go. AlphaGo combined deep neural networks, trained with supervised and reinforcement learning, with Monte Carlo tree search, and its victory highlighted the power of these techniques in a game long thought to require human intuition.

- 2020: GPT-3: OpenAI’s GPT-3 (Generative Pre-trained Transformer 3), a large language model with 175 billion parameters, can generate fluent, human-like text, translate between languages, write creative content, and answer questions, often after seeing just a few examples in its prompt. Built on the Transformer architecture introduced by Google researchers in 2017, GPT-3 demonstrated the potential of AI for creative writing, content generation, and language translation.
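
The game-tree search at the heart of chess programs such as Deep Blue can be sketched as minimax with alpha-beta pruning: each player is assumed to choose the move that is best for them, and branches that provably cannot change the final choice are skipped. The Python sketch below runs on a hypothetical tree two moves deep with made-up leaf scores; Deep Blue's actual search added specialized chess hardware, massive parallelism, and a far more elaborate evaluation function.

```python
import math


def alphabeta(node, maximizing, alpha=-math.inf, beta=math.inf):
    """Minimax value of a game tree, skipping branches that cannot affect the result.

    A node is either a number (a leaf's static evaluation) or a list of child nodes.
    """
    if isinstance(node, (int, float)):
        return node
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, False, alpha, beta))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # the minimizing opponent would never allow this line
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, True, alpha, beta))
        beta = min(beta, value)
        if alpha >= beta:
            break  # the maximizing player already has a better option elsewhere
    return value


# Hypothetical tree: we pick one of three moves, then the opponent picks a reply.
TREE = [[3, 5], [2, 9], [6, 4]]
print(alphabeta(TREE, maximizing=True))
```

Here the opponent's best replies leave us 3, 2, and 4, so the search returns 4; the leaf 9 is never examined, because once the opponent can force 2 on the second move, that move is already worse than the first.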

The Role of Research Institutions and Companies

The development of AI has been driven by a synergistic interplay between research institutions and private companies. Both play crucial roles in pushing the boundaries of AI research and development, each with its own unique strengths and perspectives.

Contributions of Leading Research Institutions

Universities such as MIT and Stanford, along with corporate research labs such as Google AI, have played a pivotal role in laying the theoretical foundations of AI and fostering groundbreaking innovations.

- MIT: The MIT Artificial Intelligence Laboratory, which merged with the Laboratory for Computer Science in 2003 to form the Computer Science and Artificial Intelligence Laboratory (CSAIL), has been a leading force in AI research for decades, making significant contributions to areas such as natural language processing, computer vision, and robotics. The laboratory has produced numerous influential researchers and has been instrumental in developing key AI algorithms and techniques.

- Stanford: The Stanford Artificial Intelligence Laboratory (SAIL) is another renowned institution that has contributed significantly to AI research. Its focus on machine learning, robotics, and computer vision has led to groundbreaking advancements in these fields. Stanford’s AI researchers have also made significant contributions to the development of deep learning algorithms.

- Google AI: Google’s AI research team has made significant contributions to the development of AI systems, particularly in areas such as machine learning, deep learning, and natural language processing. Google’s AI research has led to the development of powerful AI tools and platforms, such as TensorFlow, which are widely used in industry and academia.

Role of Private Companies in Driving AI Innovation

Private companies have played a crucial role in translating AI research into real-world applications and driving innovation through their significant investments and focus on commercialization.

- Google: Google has been a major player in the development and deployment of AI technologies, investing heavily in research and development and building AI-powered products and services. Examples include Google Search, Google Assistant, and Google Translate, which leverage AI to enhance user experience and efficiency.

- Microsoft: Microsoft has also been a key player in AI, investing in research and developing AI platforms and services like Azure AI and Microsoft Cognitive Services. These services empower businesses to integrate AI into their operations and create innovative applications.

- Amazon: Amazon’s AI initiatives have focused on developing AI-powered services for its e-commerce platform, as well as for other businesses. Amazon Web Services (AWS) provides cloud-based AI services that allow businesses to leverage AI capabilities without the need for significant infrastructure investment.

Research Focus and Approaches of Academic Institutions and Private Companies

While both academic institutions and private companies contribute significantly to AI research and development, their research focus and approaches often differ.

- Academic Institutions: Academic institutions tend to focus on fundamental research, exploring new theoretical concepts and algorithms. Their research is often driven by curiosity and a desire to advance the field of AI. Academic research often emphasizes long-term goals and the development of new knowledge, with less immediate focus on commercialization.

- Private Companies: Private companies, on the other hand, tend to focus on AI solutions that can be commercialized and deployed in real-world applications. Their research is often driven by market demand and the need to create products and services that generate revenue, so it typically emphasizes nearer-term goals, practical applications, scalability, and efficiency.

The Collective Effort of AI Researchers

The development of artificial intelligence (AI) is not the work of a single individual or even a small group of researchers. It is a collective endeavor, with countless individuals from diverse backgrounds contributing their expertise and insights. The collaborative nature of AI research is a driving force behind its rapid progress, fostering knowledge sharing and promoting innovation.

Prominent AI Conferences and Publications

AI conferences and publications serve as crucial platforms for disseminating research findings, fostering collaboration, and shaping the direction of the field. They provide a space for researchers to present their work, engage in discussions, and learn from their peers.

- The Association for the Advancement of Artificial Intelligence (AAAI) Conference: One of the most prestigious AI conferences, the AAAI Conference brings together leading researchers to present cutting-edge work in all areas of AI. The conference is known for its high-quality technical program, which includes keynote speeches, invited talks, and research papers.

- The International Conference on Machine Learning (ICML): A premier conference focusing on machine learning, ICML is a gathering of researchers from academia and industry who present their latest findings and discuss emerging trends in the field.

- The Neural Information Processing Systems (NeurIPS) Conference: NeurIPS is a highly influential conference that covers topics such as deep learning, machine learning, and computational neuroscience. The conference is renowned for its rigorous peer-review process and its high-quality research papers.

- The Journal of Artificial Intelligence Research (JAIR): JAIR is a leading journal that publishes original research in all areas of artificial intelligence. The journal is known for its high-quality papers and its rigorous peer-review process.

- The ACM Transactions on Intelligent Systems and Technology (TIST): TIST is a journal that publishes high-quality research papers on the theory, design, and development of intelligent systems and technologies.

Examples of Collaborative Research Efforts

Numerous examples showcase the collaborative nature of AI research and the contributions of different research groups and individuals to specific areas of AI development.

- Deep Learning: The development of deep learning, a powerful subfield of AI, is a testament to the collaborative nature of research. Researchers from various institutions, including the University of Toronto, Stanford University, and Google, have contributed to the development of key concepts and algorithms, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs).

- Natural Language Processing (NLP): NLP, which focuses on enabling computers to understand and process human language, has benefited from the collaborative efforts of researchers worldwide. For example, GPT-3 was built at OpenAI on the Transformer architecture introduced by Google researchers in 2017, and today's large language models (LLMs) draw on work from OpenAI, Google, and many other institutions.

- Computer Vision: The field of computer vision, which aims to enable computers to “see” and interpret images, has witnessed significant progress due to collaborative research. Researchers from universities and companies like Facebook and Microsoft have made substantial contributions to the development of object detection algorithms, image segmentation techniques, and other key advancements.

The Ongoing Evolution of AI

AI research and development are rapidly evolving, driven by continuous advancements in computing power, data availability, and algorithmic sophistication. The field is characterized by a dynamic interplay of theoretical breakthroughs, practical applications, and ethical considerations.

Current Trends and Challenges

AI research is currently experiencing a surge of activity in several key areas.

- Deep Learning: This subfield of machine learning has achieved remarkable success in tasks like image recognition, natural language processing, and game playing. Deep learning models, with their multi-layered artificial neural networks, are capable of learning complex patterns from vast amounts of data. However, the black-box nature of deep learning models raises concerns about interpretability and explainability.

- Reinforcement Learning: This approach involves training AI agents to learn through trial and error, maximizing rewards by interacting with their environment. Reinforcement learning has shown promise in areas like robotics, game playing, and autonomous driving. However, scaling reinforcement learning to complex real-world scenarios remains a challenge.

- Explainable AI (XAI): As AI systems become increasingly complex, the need for explainability and transparency is paramount. XAI focuses on developing methods to make AI decisions understandable to humans, fostering trust and accountability. This area is crucial for addressing concerns about bias, fairness, and safety in AI systems.

- Generative AI: This branch of AI focuses on creating new content, such as text, images, audio, and video. Generative models, like Generative Adversarial Networks (GANs) and large language models (LLMs), have demonstrated impressive capabilities in generating realistic and creative outputs. However, the potential misuse of generative AI for malicious purposes, such as creating deepfakes or spreading misinformation, requires careful consideration.
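
The trial-and-error loop described under reinforcement learning can be sketched with tabular Q-learning, one classic reinforcement learning algorithm. In this Python sketch the five-cell corridor, its reward of 1 at the goal, and the hyperparameters (`alpha` for the learning rate, `gamma` for the discount, `epsilon` for exploration) are all hypothetical choices for illustration; practical systems such as robot controllers often replace the table with a neural network.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # index 0 steps left, index 1 steps right


def step(state, action):
    """Hypothetical toy environment: move along the corridor, earning reward 1 at the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action] value estimates
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit current estimates, sometimes explore at random.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Move the estimate toward the reward plus the discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q = q_learning()
policy = ["left" if row[0] > row[1] else "right" for row in q[:-1]]
print(policy)
```

The agent is never told that "right" is correct; it discovers this because rewards earned at the goal propagate backward through the value estimates, each step discounted by `gamma`.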

Potential Future Directions

The future of AI promises exciting advancements across diverse domains.

- AI for Scientific Discovery: AI is poised to revolutionize scientific research by automating experiments, analyzing massive datasets, and accelerating the discovery of new knowledge. This includes areas like drug discovery, materials science, and climate modeling.

- AI-Powered Healthcare: AI has the potential to transform healthcare by enabling personalized medicine, early disease detection, and more efficient drug development. AI-powered tools can assist doctors in diagnosis, treatment planning, and patient monitoring.

- AI for Sustainable Development: AI can contribute to addressing global challenges like climate change, resource scarcity, and poverty. AI-powered systems can optimize energy consumption, improve agricultural practices, and develop innovative solutions for sustainable development.

- AI and the Future of Work: AI is expected to automate many tasks, leading to changes in the workforce. While this could lead to job displacement, it also presents opportunities for new jobs and skills development. The ethical implications of AI in the workplace, including bias and fairness, require careful consideration.

Key Areas of AI Research

| Area | Current Status | Potential Future Impact |
|---|---|---|
| Natural Language Processing (NLP) | Significant progress in language understanding and generation, with applications in machine translation, text summarization, and chatbot development. | More advanced NLP systems could enable more natural human-computer interaction, supporting personalized communication, information retrieval, and content creation. |
| Computer Vision | Rapid advances in image and video recognition, object detection, and scene understanding, driving applications in autonomous vehicles, medical imaging, and surveillance. | Increasingly capable vision systems could let AI perceive and interpret the world more reliably, improving safety and efficiency. |
| Robotics | Increasingly dexterous and intelligent robots capable of performing tasks in complex environments, with applications in manufacturing, logistics, and healthcare. | Robots could become more common, working alongside humans on tasks that are dangerous, repetitive, or require specialized skills, and raising productivity. |
| AI Ethics | Growing awareness of the ethical implications of AI, including bias, fairness, privacy, and safety, with research focused on ethical frameworks and guidelines for AI development and deployment. | AI ethics is likely to become increasingly central to ensuring that AI is developed and used responsibly and that its benefits are shared broadly. |

Closing Summary

The story of AI is one of continuous evolution, driven by the relentless pursuit of knowledge and the collaborative spirit of researchers. As we stand on the cusp of a new era defined by AI’s transformative potential, it is essential to acknowledge the pioneers who laid the groundwork and the collective efforts that continue to shape this dynamic field.

The future of AI holds vast possibilities, and it is through the combined ingenuity and dedication of researchers, institutions, and companies that we will continue to unlock its potential and navigate its ethical complexities.

Popular Questions

Is AI a recent invention?

While AI has seen a surge in popularity and progress in recent years, its roots reach back to the mid-20th century. The foundational concepts and early research of that period laid the groundwork for the field's later growth, including the rapid advances of recent decades.

What are the main challenges in AI development?

AI research faces numerous challenges, including the development of robust algorithms that can handle complex tasks, the ethical implications of AI systems, and the need for large datasets to train and improve AI models.

How can I contribute to AI research?

There are various ways to contribute to AI research, even without a formal background in computer science. You can explore open-source projects, participate in online forums, or contribute to AI-related datasets. Additionally, pursuing a degree in computer science or related fields can provide a pathway to direct involvement in AI research.