Artificial intelligence (AI) models are becoming increasingly sophisticated and integrated into our lives, offering a wide range of benefits. However, this advancement raises critical concerns about data privacy. As AI models learn from vast amounts of data, including user interactions, it becomes crucial to understand how to safeguard personal information and minimize the risks associated with sharing data with these systems.

This guide explores the potential privacy implications of interacting with AI models and offers practical strategies and best practices for protecting your personal information. We look at how AI systems handle the data you enter and the output they return, review the privacy settings available within AI platforms, and discuss techniques such as data anonymization and encryption.

Understanding Privacy Concerns

Sharing information with AI models, such as Kami, can raise privacy concerns. It’s crucial to understand the potential risks involved and prioritize data security and privacy during interactions with AI.

Potential Risks of Sharing Information with AI Models

AI models are trained on vast datasets, and the information you share with them can be used to enhance their learning process. However, this can also lead to potential privacy risks:

- Data misuse: Your personal information could be used for purposes you didn't consent to, such as targeted advertising or profiling.

- Data breaches: If the AI model's data storage is compromised, your information could be accessed by unauthorized individuals.

- Privacy violations: AI models can be used to infer sensitive information about you, even if you haven't explicitly shared it.

Importance of Data Security and Privacy

Data security and privacy are paramount when interacting with AI models. It’s crucial to:

- Understand the AI model's privacy policy: Review the policy carefully to understand how your data is collected, used, and protected.

- Minimize the information you share: Avoid sharing sensitive information unless absolutely necessary.

- Use strong passwords and security measures: Protect your account from unauthorized access.
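One way to follow the strong-password advice is to generate passwords randomly instead of inventing them. Below is a minimal sketch using Python's standard `secrets` module, which draws from a cryptographically secure source. The `generate_password` name, the 20-character default, and the 12-character floor are illustrative assumptions and do not come from any particular AI platform's policy.

```python
import secrets
import string

# Character pool for generated passwords: letters, digits and punctuation.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Return a random password built with a cryptographically secure RNG."""
    # Assumed floor of 12 characters; adjust to the platform's own policy.
    if length < 12:
        raise ValueError("password length should be at least 12")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


if __name__ == "__main__":
    print(generate_password())
```

Use `secrets` rather than `random` here: `random` is designed for simulations, not security, and its output can be predicted.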

Examples of Personal Data Misuse

The following common scenarios illustrate how personal data can be misused or compromised:

- Targeted advertising: Companies use AI models to analyze user data and target them with personalized ads, sometimes based on sensitive information like health or financial status.

- Facial recognition: AI-powered facial recognition systems can be used for surveillance and tracking, raising concerns about privacy and potential misuse.

- Data leaks: Major data breaches have exposed millions of users' personal information, leading to identity theft and other security risks.

Data Input and Output Control

Understanding how user input is handled by AI models and how output is controlled is crucial for ensuring privacy. This section explores these aspects in detail, highlighting methods to mitigate potential privacy risks.

User Input Handling

AI models learn from the data they are trained on, and depending on the provider's policies, that data may include user input. This input can be text, images, audio, or any other type of data the model is designed to process. The way it is handled can significantly affect privacy.

- Data Preprocessing: Before being fed into the model, user input is typically preprocessed. This may involve cleaning the data, removing irrelevant information, and converting it into a format suitable for the model. This process can introduce privacy risks if sensitive information is not adequately masked or removed.

- Model Training: During training, the model learns patterns and relationships from the data, including user input. This can lead to the model memorizing sensitive information, especially if the training data is not anonymized or properly sanitized.

- Data Storage: User input may be stored temporarily or permanently for various purposes, such as model improvement or debugging. This storage can pose privacy risks if the data is not secured adequately or if access controls are not implemented properly.
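Users can reduce the preprocessing and storage risks above on their own side by scrubbing obvious identifiers from a prompt before sending it. Below is a minimal sketch under loud assumptions. The `redact` function and its two regular expressions are hypothetical. They catch only common email addresses and North American phone formats, so they are no substitute for a dedicated PII-detection tool.

```python
import re

# Hypothetical, deliberately simple patterns: common email addresses and
# North American phone numbers only. Real PII detection needs more than this.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"(?<!\d)(?:\+?1[-.\s]?)?\(?\d{3}\)?[-.\s]?\d{3}[-.\s]?\d{4}(?!\d)"),
}


def redact(text: str) -> str:
    """Replace every match of each pattern with a placeholder like [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = "Email jane.doe@example.com or call (555) 123-4567."
    print(redact(prompt))  # Email [EMAIL] or call [PHONE].
```

Placeholders such as `[EMAIL]` keep the prompt readable for the model while withholding the actual values.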

Data Sharing and Privacy Implications

The following table outlines different types of data that can be shared with AI models and their potential privacy implications:

| Data Type | Privacy Implications |
|---|---|
| Personal Information (e.g., name, address, phone number) | High risk of identity theft, fraud, and unauthorized access to sensitive data. |
| Medical Records | High risk of privacy breaches, discrimination, and unauthorized access to sensitive health information. |
| Financial Data | High risk of financial fraud, identity theft, and unauthorized access to financial records. |
| Location Data | Moderate risk of tracking, profiling, and unauthorized access to location information. |
| Social Media Posts | Moderate risk of exposure of personal opinions, beliefs, and relationships. |
| Images and Videos | Moderate risk of facial recognition, unauthorized use, and privacy violations. |
| Audio Recordings | Moderate risk of eavesdropping, unauthorized use, and privacy violations. |

Output Control

Controlling the output generated by AI models is crucial for preventing the disclosure of sensitive information. Several techniques can be employed:

- Data Masking and Redaction: Sensitive information can be masked or redacted from the output before it is presented to the user. This involves replacing sensitive data with placeholder values or removing it altogether.

- Output Filtering: AI models can be trained, or paired with rule-based filters, to remove potentially sensitive information from their output. This involves identifying and removing specific patterns or keywords associated with sensitive data.

- Privacy-Preserving Techniques: Techniques like differential privacy and homomorphic encryption can protect sensitive information during model training and inference. Differential privacy adds calibrated noise so that results reveal little about any single individual. Homomorphic encryption lets computations run directly on encrypted data without decrypting it first.

- Model Auditing and Monitoring: Regularly auditing the model's output and monitoring its performance can help identify potential privacy breaches. This involves analyzing the model's predictions and identifying any instances where sensitive information might be revealed.
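The simplest building block of differential privacy is the Laplace mechanism. To release a count over user records, you add noise drawn from a Laplace distribution with scale 1/ε, where ε (epsilon) is the privacy budget. Smaller ε means more noise and stronger privacy. The sketch below makes several assumptions:

- It assumes a counting query, whose sensitivity is 1.
- The function names are hypothetical.
- Python's `random` module is not cryptographically secure and is not hardened against known floating-point attacks on naive Laplace sampling, so a vetted differential-privacy library is preferable in production.

```python
import random
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    # expovariate takes a rate, so rate = 1/scale gives exponentials with mean = scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(
    records: Iterable[T],
    predicate: Callable[[T], bool],
    epsilon: float,
    rng: Optional[random.Random] = None,
) -> float:
    """Release a count under epsilon-differential privacy (Laplace mechanism).

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1/epsilon is sufficient.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    ages = [23, 35, 41, 29, 52, 38, 27, 64]
    # Smaller epsilon -> more noise -> stronger privacy.
    print(private_count(ages, lambda a: a >= 40, epsilon=0.5))
```

Each release of a noisy count spends privacy budget. Repeating the same query many times and averaging the answers would wash the noise out, which is why real systems track the total ε spent.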

Account Settings and Privacy Options

Most AI platforms offer various settings and privacy options to control data usage and sharing. These settings allow users to customize their privacy preferences and ensure their data is handled according to their wishes.

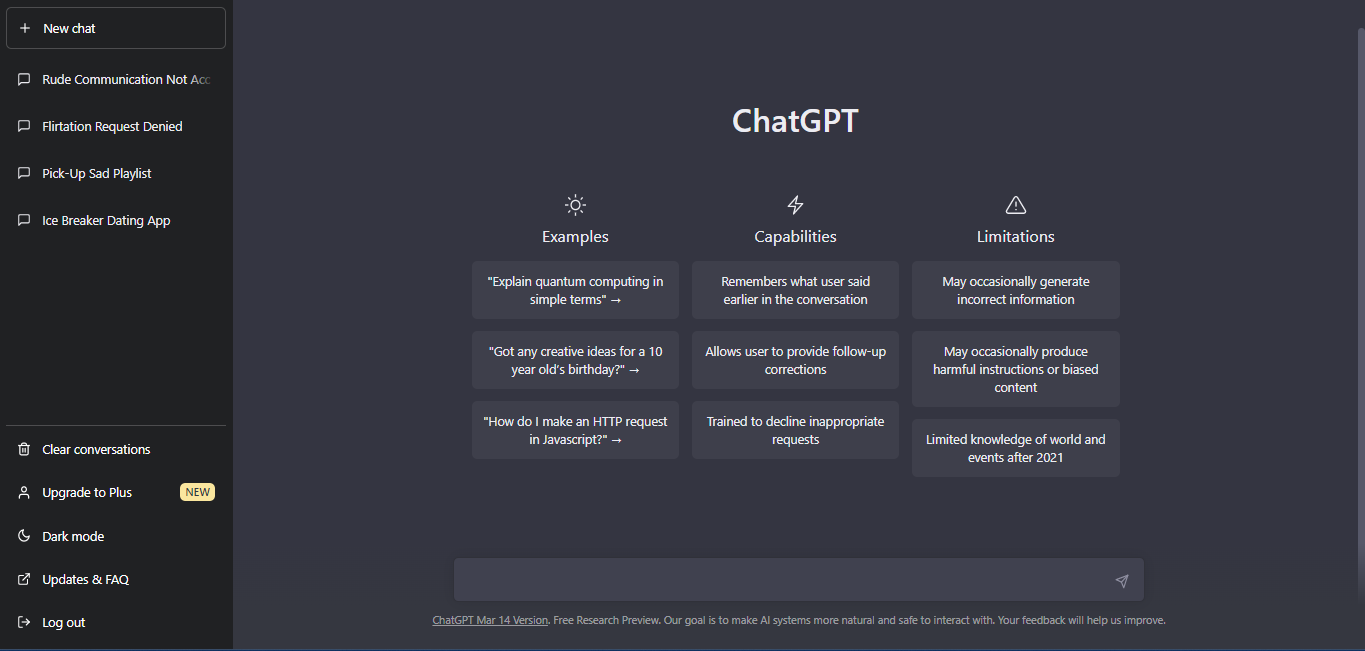

Privacy Settings Within Kami

Kami provides several privacy settings to manage data sharing and usage. Users can access these settings within their account dashboard.

- Data Deletion: Users can delete their chat history, including conversations, prompts, and responses. This removes the data from Kami’s servers and prevents it from being used for training or other purposes.

- Data Usage: Kami allows users to choose how their data is used. Users can opt out of having their data used for training AI models or for personalized experiences.

- Data Visibility: Users can control who can see their chat history. They can choose to make their history public, private, or only visible to specific individuals.

- Cookie Management: Kami uses cookies to enhance user experience and track usage. Users can manage cookie settings to control what data is collected and shared.

Configuring Privacy Options in Kami

To configure privacy options in Kami, follow these steps:

- Access Account Settings: Log in to your Kami account and navigate to the account settings page. This can usually be found under a “Profile,” “Settings,” or “Account” tab.

- Select Privacy Options: Look for the “Privacy” section within your account settings. Here, you will find options related to data deletion, usage, visibility, and cookie management.

- Adjust Settings: Select the desired privacy settings based on your preferences. For example, you can choose to delete your chat history, opt out of data usage for training, or make your history private.

- Save Changes: Once you have made your selections, save the changes to ensure your privacy settings are applied.

Privacy Features Comparison Across AI Platforms

Different AI platforms offer varying levels of privacy features. Some platforms provide more granular control over data sharing and usage, while others may have more limited options.

- Google AI Platform: Offers options to control data usage, including consent for model training and data sharing with third parties. It also allows users to delete their data and manage cookies.

- Microsoft Azure AI: Provides features like data encryption, access control, and data retention policies. Users can control how their data is used and shared within the platform.

- Amazon SageMaker: Offers data encryption, access control, and data governance features. Users can manage data access and usage within the platform.

Data Anonymization and Encryption

Data anonymization and encryption are essential techniques for safeguarding user privacy when interacting with AI models like Kami. These methods aim to protect sensitive information by removing personally identifiable details and securing data transmission.

Data Anonymization

Data anonymization involves transforming data to remove or replace personally identifiable information (PII), such as names, addresses, and phone numbers. The goal is to make it impossible to identify individuals from the data, while preserving its usefulness for analysis and training AI models.

Several anonymization techniques are available:

- Generalization: Replacing specific values with broader categories. For example, replacing a precise age with an age range (e.g., 25-34).

- Suppression: Removing sensitive data entirely. For example, deleting columns containing names or addresses from a dataset.

- Pseudonymization: Replacing PII with consistent artificial identifiers (pseudonyms), making it harder to link data to specific individuals. Anyone who holds the mapping or key can still re-identify the records, so pseudonymization is weaker than full anonymization and is often used in conjunction with other anonymization methods.
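The three techniques can be combined on a single record. In the sketch below, the field names, the ten-year age bands, and `SECRET_KEY` are assumptions chosen for illustration. Pseudonyms are computed with a keyed hash (HMAC-SHA256) rather than a plain hash. A plain hash of an email address or phone number can often be reversed by hashing likely guesses. Anyone holding the key can still re-link records, so the key must be kept separate from the data.

```python
import hashlib
import hmac

# Hypothetical key; in practice load it from a secrets store, never hard-code it.
SECRET_KEY = b"replace-with-a-long-random-secret"

# Assumed direct identifiers to suppress.
DIRECT_IDENTIFIERS = {"name", "email", "phone", "address"}


def generalize_age(age: int) -> str:
    """Generalization: map an exact age to a ten-year band, e.g. 29 -> '25-34'."""
    if age < 0:
        raise ValueError("age must be non-negative")
    if age < 15:
        return "0-14"
    low = 15 + ((age - 15) // 10) * 10
    return f"{low}-{low + 9}"


def pseudonymize(value: str) -> str:
    """Pseudonymization: a keyed hash gives a stable ID that cannot be recomputed without the key."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def anonymize(record: dict) -> dict:
    """Apply suppression, generalization and pseudonymization to one record."""
    # Assumes the record has 'email' and 'age' fields.
    result = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}  # suppression
    result["age"] = generalize_age(record["age"])  # generalization
    result["pid"] = pseudonymize(record["email"].lower())  # pseudonymization
    return result


if __name__ == "__main__":
    patient = {"name": "Jane Doe", "email": "jane@example.com",
               "phone": "555-123-4567", "age": 29, "diagnosis": "asthma"}
    print(anonymize(patient))
```

For the sample patient, the result keeps only three fields: the age band `'25-34'`, the diagnosis, and a 16-character pseudonym in place of the name, email, and phone. Because the pseudonym is stable, records belonging to the same person can still be linked for analysis.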

Encryption Techniques

Encryption plays a crucial role in securing data transmitted between users and AI models. It involves transforming data into an unreadable format, making it inaccessible to unauthorized parties. Common encryption techniques include:

- Symmetric-key encryption: Uses the same key for both encryption and decryption. This method is fast, but the key must be shared securely between the parties and protected from unauthorized access.

- Asymmetric-key encryption: Uses a key pair. A public key, which can be shared openly, encrypts the data, and only the matching private key, held by the intended recipient, can decrypt it. This simplifies key distribution but is slower than symmetric encryption, so the two are often combined.

- End-to-end encryption: Encrypts data at the source and decrypts it only at the intended recipient, ensuring data privacy throughout its journey.
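AI services are typically reached over HTTPS, which relies on TLS. TLS combines the approaches listed above. Asymmetric cryptography authenticates the server and establishes keys during the handshake, and a symmetric cipher then encrypts the traffic. The sketch below uses only Python's standard `ssl` and `socket` modules. The `send_over_tls` helper and the TLS 1.2 minimum are illustrative assumptions. TLS protects data between you and the provider's server, but it is not end-to-end encryption: the provider decrypts your input in order to process it.

```python
import socket
import ssl


def make_tls_context() -> ssl.SSLContext:
    """Client TLS context that verifies the server's certificate and hostname."""
    context = ssl.create_default_context()  # trusted CAs, CERT_REQUIRED, hostname checks
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # assumed floor: refuse older protocols
    return context


def send_over_tls(host: str, port: int, payload: bytes) -> bytes:
    """Send payload over an encrypted, authenticated connection; return the first reply chunk."""
    context = make_tls_context()
    with socket.create_connection((host, port), timeout=10) as raw_sock:
        # The handshake raises ssl.SSLError if the certificate or hostname does not check out.
        with context.wrap_socket(raw_sock, server_hostname=host) as tls_sock:
            tls_sock.sendall(payload)
            return tls_sock.recv(4096)
```

Keeping certificate and hostname verification switched on matters as much as the encryption itself. Without verification, an attacker in the middle could present their own key and read the traffic.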

Implementation Examples

- Healthcare: Anonymizing patient data before sharing it with AI models for disease prediction or treatment optimization. This ensures patient privacy while allowing researchers to leverage valuable medical insights.

- Financial Services: Encrypting customer financial data during online transactions, protecting sensitive information from unauthorized access.

- Social Media: Anonymizing user data before sharing it with AI models for sentiment analysis or recommendation systems, preserving user privacy while enabling valuable insights.

Last Word

Navigating the intersection of AI and privacy requires a proactive approach. By understanding the potential risks, implementing appropriate privacy controls, and embracing responsible AI usage practices, individuals can mitigate the vulnerabilities associated with sharing data with AI models. This guide provides a framework for informed decision-making, empowering users to prioritize privacy while harnessing the benefits of AI.

Helpful Answers

How do I know if an AI model is collecting my data?

Most AI platforms have privacy policies that outline their data collection practices. Carefully review these policies to understand what information is collected, how it's used, and what controls you have over your data.

Can I use AI models without sharing any personal information?

While it’s challenging to completely avoid sharing any data, some AI models offer anonymized or aggregated data options that minimize the collection of personal information. Consider using these options when available.

What are the potential consequences of sharing personal data with AI models?

The potential consequences of sharing personal data with AI models can range from unwanted advertising and targeted marketing to identity theft and privacy breaches. It’s crucial to be aware of these risks and take steps to mitigate them.