Large language models (LLMs) have revolutionized the field of artificial intelligence, exhibiting remarkable abilities in natural language processing tasks like text generation, translation, and question answering. However, a key aspect of their functionality often sparks debate: memory. Does an LLM like Kami truly possess memory, and if so, how does it compare to human memory?

This exploration delves into the concept of memory in AI, examining the architecture and mechanisms behind Kami’s memory capabilities. We will analyze its limitations and implications, drawing comparisons with other prominent AI models to gain a comprehensive understanding of the role memory plays in shaping the performance and potential of these sophisticated systems.

Understanding Memory in AI

Memory is a fundamental concept in artificial intelligence (AI), playing a crucial role in enabling AI systems to learn, adapt, and perform complex tasks. While AI memory shares similarities with human memory, it also exhibits significant differences in its structure, function, and implementation.

Types of Memory in AI

Different types of memory are used in AI systems, each serving a specific purpose. These include:

- Short-term memory: This type of memory stores information temporarily, similar to human working memory. It is used to hold data that is currently being processed or accessed. For example, in a chatbot, short-term memory stores the current conversation context to maintain a coherent dialogue.

- Long-term memory: This type of memory stores information persistently, akin to human long-term memory. It is used to store knowledge, experiences, and learned patterns that can be accessed and used in future tasks. For example, a language model like GPT-3 does not keep its training text verbatim; training distills patterns from vast amounts of text into the model’s parameters, which then act as long-term memory for generating coherent and contextually relevant responses.

- Episodic memory: This type of memory stores specific events or experiences, similar to human episodic memory. It is used to recall past occurrences and their associated context. For example, a self-driving car uses episodic memory to learn from past driving experiences, such as recognizing traffic patterns or identifying obstacles.

- Semantic memory: This type of memory stores general knowledge and facts, similar to human semantic memory. It is used to access and utilize information about the world, such as definitions, concepts, and relationships. For example, a question-answering system uses semantic memory to retrieve relevant information from a knowledge base to answer user queries.

Importance of Memory for AI Tasks

Memory is crucial for AI systems to perform various tasks effectively. Here are some key examples:

- Language understanding: AI systems like chatbots and language models rely on memory to understand the context of conversations, track the flow of dialogue, and generate coherent responses.

- Learning: AI systems use memory to store learned patterns, rules, and experiences, allowing them to improve their performance over time. For example, a machine learning model reads examples from its training data and updates its parameters to capture the patterns it finds there; the parameters, not the raw data, are what the trained model retains.

- Reasoning: AI systems use memory to store and retrieve relevant information, enabling them to draw inferences and make decisions. For example, a knowledge-based system uses memory to store facts and rules, allowing it to reason about new situations and solve problems.

Kami’s Architecture and Memory

Kami, a large language model (LLM) developed by OpenAI, exhibits remarkable capabilities in generating human-like text, translating languages, writing creative content, and answering questions informatively. This AI system relies on a complex architecture and intricate memory mechanisms to achieve its performance.

Kami’s Architecture

Kami’s architecture is based on a transformer neural network, a type of deep learning model that excels at processing sequential data, such as text. The transformer network consists of multiple layers, each composed of two sub-layers: a multi-head self-attention layer and a feed-forward neural network.

- Multi-head self-attention: This layer allows the model to attend to different parts of the input sequence simultaneously, capturing complex relationships between words and phrases. It helps the model understand the context and meaning of the input text.

- Feed-forward neural network: This layer applies non-linear transformations to the output of the self-attention layer, further enhancing the model’s ability to represent and process information.
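The self-attention step described above can be sketched in a few lines of plain Python. This is a simplified illustration, not Kami’s actual implementation: it uses a single head and treats each token vector as its own query, key, and value, whereas a real transformer applies learned projection matrices and runs several heads in parallel. The names `softmax`, `self_attention`, and `tokens` are chosen for this example only.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Single-head scaled dot-product attention with Q = K = V = vectors.

    Assumption: no learned projections, which a real transformer would have.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Similarity of this token to every token, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Each output is a weighted mix of all token vectors.
        mixed = [
            sum(w * value[i] for w, value in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy 2-dimensional "token" vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)
```

Each row of `weights` shows how strongly one token attends to every token in the sequence. Here the first token puts more weight on the third token, with which it shares a component, than on the second, with which it shares none.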

The transformer network is trained on a massive dataset of text and code, enabling Kami to learn patterns and relationships in language. This training process involves adjusting the model’s parameters to minimize the difference between its predictions and the actual target outputs.
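To make "minimizing the difference between predictions and targets" concrete, here is a minimal sketch of one gradient-descent step on a cross-entropy loss for next-token prediction. It is a simplification under stated assumptions: the step adjusts three output scores (logits) directly, whereas real training adjusts the network’s weights through backpropagation. The vocabulary size, `learning_rate`, and function names are illustrative.

```python
import math

def softmax(logits):
    """Convert raw scores into a probability distribution."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target_index):
    """Loss is low when the correct token gets high probability."""
    return -math.log(softmax(logits)[target_index])

def gradient_step(logits, target_index, learning_rate=0.5):
    """One descent step; the gradient w.r.t. the logits is (probs - one_hot)."""
    probs = softmax(logits)
    grads = [
        p - (1.0 if i == target_index else 0.0)
        for i, p in enumerate(probs)
    ]
    return [x - learning_rate * g for x, g in zip(logits, grads)]

# Toy vocabulary of three tokens; the correct next token is index 2.
logits = [0.0, 0.0, 0.0]
target = 2
loss_before = cross_entropy(logits, target)
logits = gradient_step(logits, target)
loss_after = cross_entropy(logits, target)
```

After the step, `loss_after` is smaller than `loss_before` because the score of the correct token has been pushed up relative to the others. Training repeats this kind of update across a very large number of examples.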

Memory Mechanisms in Kami

Kami utilizes a combination of short-term and long-term memory mechanisms to process and store information effectively.

Short-Term Memory

Kami’s short-term memory is represented by the internal state of the transformer network during the processing of a specific input sequence. This state is recomputed as the model processes each token in the input, and it exists only for the duration of that sequence. It allows the model to maintain context and remember recent information relevant to the current conversation.

Long-Term Memory

Long-term memory in Kami is embodied in the model’s parameters, which are learned during the training process. These parameters encode the vast knowledge acquired from the training data, including vocabulary, grammar rules, factual information, and various language patterns. The model accesses and utilizes this long-term memory to generate coherent and informative responses.
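The split between the two kinds of memory can be illustrated with a toy sketch. Everything here is hypothetical: `ToyModel`, its lookup-table `parameters`, and `new_conversation` are invented for illustration, and a real transformer stores knowledge in numeric weights, not in a dictionary. The point is only that parameters stay fixed after training while the context is discarded between conversations.

```python
from types import MappingProxyType

class ToyModel:
    """Hypothetical stand-in for an LLM: fixed parameters plus a per-chat context."""

    def __init__(self, parameters):
        # Long-term memory: set once by "training", exposed as a read-only view.
        self.parameters = MappingProxyType(dict(parameters))
        # Short-term memory: tokens seen in the current conversation only.
        self.context = []

    def observe(self, token):
        """Add a token to the short-term context."""
        self.context.append(token)

    def predict_next(self):
        """Look up a continuation for the most recent token, if any."""
        if not self.context:
            return "<unk>"
        return self.parameters.get(self.context[-1], "<unk>")

    def new_conversation(self):
        """Starting over clears the context but leaves the parameters alone."""
        self.context = []

model = ToyModel({"good": "morning", "thank": "you"})
model.observe("thank")
first_prediction = model.predict_next()
model.new_conversation()
```

After `new_conversation()`, the context is empty, yet the parameters still map `"good"` to `"morning"`: what was learned in training survives, while what was said in the chat does not.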

Information Management and Retrieval

Kami manages and retrieves information from its memory through two mechanisms that operate at different times: attention while it processes a prompt, and parameter updates while it is being trained.

- Attention Mechanisms: The multi-head self-attention layer allows the model to focus on specific parts of the input sequence, effectively retrieving relevant information from the short-term memory. The attention weights dynamically adjust based on the input and the model’s current state.

- Parameter Updates: During the training process, the model’s parameters are adjusted to minimize prediction errors. These updates refine the model’s long-term memory, allowing it to store and retrieve information more accurately and effectively. Once training ends, the parameters are fixed: new information in a conversation affects only the short-term context, and the knowledge base changes only when the model is retrained or fine-tuned.

The combination of short-term and long-term memory, along with the attention mechanisms, enables Kami to engage in complex conversations, generate creative text in various formats, and answer questions comprehensively.

Limitations of Kami’s Memory

Kami, while impressive in its ability to generate human-like text, has limitations when it comes to memory. Its memory is not like a human’s, where information is stored and recalled seamlessly. Understanding these limitations is crucial for effectively using Kami and recognizing its potential shortcomings.

Capacity and Retention Duration

Kami’s memory capacity is finite and limited by its training data and model architecture. Within a conversation, the model can only attend to a fixed amount of text at once, known as the context window. The window is measured in tokens (word fragments) rather than in time, and is typically limited to a few thousand tokens.

Beyond this limit, the model starts to “forget” information from earlier parts of the conversation.
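The "forgetting" described above can be sketched as simple truncation: when the conversation outgrows the window, the oldest messages are dropped. This is an illustrative assumption about how an application might trim history, not a description of Kami’s internals. `count_tokens` is a crude stand-in that counts whitespace-separated words, whereas real tokenizers split text into subword units, and the budget of 16 tokens is deliberately tiny.

```python
def count_tokens(text):
    """Rough stand-in for a tokenizer: one token per whitespace-separated word."""
    return len(text.split())

def fit_to_window(messages, max_tokens):
    """Keep the most recent messages whose combined size fits the window."""
    kept = []
    used = 0
    # Walk backwards so the newest messages are kept first.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break  # everything older than this point is dropped
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept

history = [
    "My name is Dana and I live in Oslo.",
    "What is the weather like today?",
    "It is cold and clear.",
    "What should I wear?",
]
visible = fit_to_window(history, max_tokens=16)
```

With this budget, the first message no longer fits, so the model would answer the final question without any access to the user’s name or city.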

Accuracy of Memory

Kami’s memory is not perfect and can be prone to inaccuracies. The model may misinterpret or forget information, especially when dealing with complex or nuanced topics. This is because the model’s memory is based on statistical patterns learned from its training data, and it may not always accurately capture the intended meaning or context of a conversation.

Impact on Task Performance

These memory limitations can significantly impact Kami’s ability to perform tasks that require memory-intensive operations. For example, tasks involving:

- Maintaining a consistent narrative across multiple turns in a conversation. The model may struggle to recall previous points and maintain a coherent storyline, leading to inconsistencies or abrupt shifts in the conversation.

- Following complex instructions or remembering specific details from previous interactions. If a user provides a series of instructions or requests, the model may forget some of them, leading to incomplete or inaccurate results.

- Performing tasks that require reasoning over extended periods of time. For example, tasks involving problem-solving or logical deduction may be challenging for Kami due to its limited memory capacity.

Examples of Memory Limitations

Here are some examples of scenarios where Kami’s memory limitations might be a concern:

- A user asks Kami to write a short story. The model may struggle to maintain a consistent plot and character development throughout the story if it exceeds its context window.

- A user asks Kami to provide information about a specific topic. The model may forget previous information or questions asked about the topic, leading to repetitive or irrelevant responses.

- A user asks Kami to help them with a complex task that requires multiple steps. The model may forget some of the steps, leading to an incomplete or inaccurate solution.

Implications of Kami’s Memory

Kami’s memory capabilities have profound implications for its ability to engage in meaningful conversations and its potential for learning and evolution. The way Kami stores and retrieves information directly impacts its conversational flow, its capacity to learn from past interactions, and its ability to adapt to new situations.

Impact on Conversational Flow

Kami’s memory allows it to maintain context during conversations, enabling a more natural and engaging dialogue. By remembering previous turns in the conversation, it can refer to earlier points, build upon prior information, and avoid repeating itself. This contextual awareness contributes to a more coherent and meaningful interaction, making it feel like a genuine exchange of ideas rather than a series of disconnected responses.

Learning and Adaptation

Kami’s memory plays a crucial role in how it adapts. Within a single conversation, it can pick up on patterns, recurring themes, and feedback from earlier turns and adjust its responses accordingly. It does not, however, update its parameters as it chats; lasting improvement comes when developers retrain or fine-tune the model, which may draw on collected interactions and feedback.

As it is trained on more data, it can potentially become more sophisticated and nuanced in its communication.

Addressing Memory Limitations

While Kami’s memory is impressive, it still has limitations. For instance, its memory span is finite, meaning it can only retain a certain amount of information from past conversations. This limitation can hinder its ability to engage in extended dialogues or recall specific details from earlier interactions.

Future advancements in AI could address these limitations by exploring more sophisticated memory architectures, allowing Kami to store and access information more efficiently. This could involve implementing techniques like:

- Long-term memory storage: Integrating a long-term memory component that can store information for extended periods, enabling Kami to recall details from past conversations even after significant time has elapsed.

- Contextual memory management: Developing algorithms that prioritize relevant information from past conversations, allowing Kami to selectively recall specific details that are most pertinent to the current conversation.

- Memory compression techniques: Exploring methods for compressing and storing information more efficiently, enabling Kami to retain a larger volume of data without sacrificing processing speed.
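As a rough illustration of the first two ideas, the sketch below stores notes from past conversations and recalls the one most relevant to a new question. It is a hypothetical design, not a feature of Kami: `MemoryStore`, `remember`, and `recall` are invented names, and relevance is scored by simple word overlap, whereas practical systems would more likely compare learned embeddings.

```python
import re

def words(text):
    """Lowercase a string and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

class MemoryStore:
    """Hypothetical external memory that outlives any single conversation."""

    def __init__(self):
        self.notes = []

    def remember(self, note):
        """Persist a note for later conversations."""
        self.notes.append(note)

    def recall(self, query, top_k=1):
        """Return the notes sharing the most words with the query."""
        query_words = words(query)
        scored = [(len(query_words & words(note)), note) for note in self.notes]
        scored = [item for item in scored if item[0] > 0]  # drop unrelated notes
        scored.sort(key=lambda item: item[0], reverse=True)
        return [note for _, note in scored[:top_k]]

store = MemoryStore()
store.remember("The user's dog is named Biscuit.")
store.remember("The user prefers metric units.")
store.remember("The user is planning a trip to Lisbon.")
recalled = store.recall("What is my dog called?")
```

An application could prepend the recalled note to the prompt, so that only the pertinent detail occupies space in the context window and the rest of the history stays out of it.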

Comparison with Other AI Models

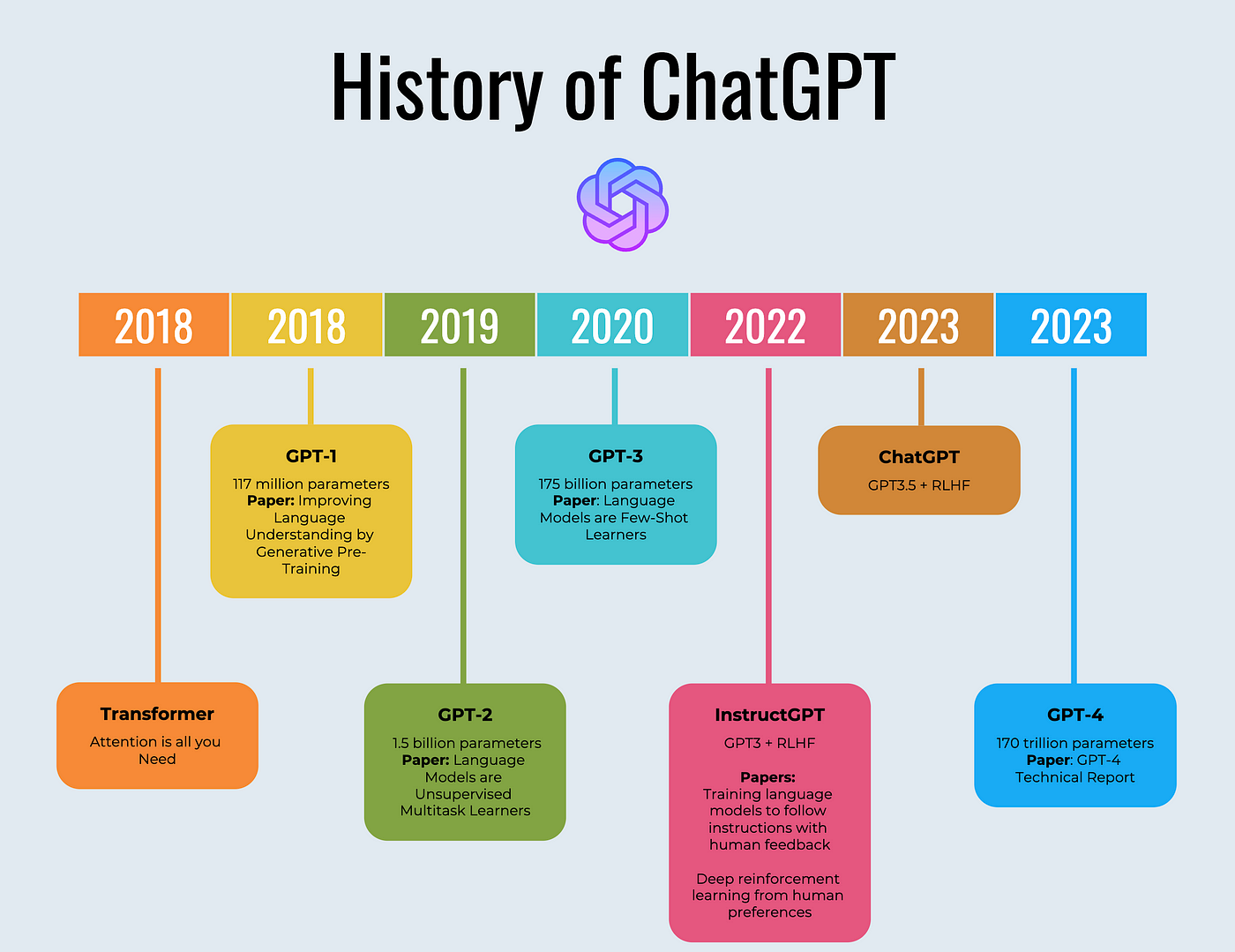

Kami, while impressive, is not the only AI model with memory capabilities. Several other prominent models, like GPT-3 and LaMDA, also exhibit varying degrees of memory functionality. Understanding how their memory capabilities compare can provide insights into their strengths and limitations.

Memory Architecture and Implementation

The memory architecture and implementation differ significantly across AI models, influencing their ability to store and recall information. Kami, based on the Transformer architecture, utilizes a contextual memory mechanism. This means that the model’s memory is implicitly encoded within the hidden states of the network, capturing the context of previous interactions.

GPT-3 relies on the same contextual memory approach: its working memory is also the text inside its context window, so how much it can retain depends on the length of that window. LaMDA, developed by Google, employs a more explicit memory system, incorporating a knowledge base alongside its neural network.

This allows LaMDA to access and integrate information from external sources, enhancing its memory capabilities.

Strengths and Weaknesses of Memory Capabilities

- Kami: Kami excels in short-term memory, effectively remembering the context of a conversation within a limited timeframe. Its strength lies in maintaining coherent dialogue and generating responses that align with the ongoing conversation. However, its memory is context-dependent, meaning it may struggle to recall information from previous conversations or unrelated topics.

- GPT-3: GPT-3’s 175 billion parameters hold a large store of knowledge learned from its training text, which helps it generate comprehensive and contextually relevant responses on complex or multifaceted topics. However, its memory is still primarily contextual and bounded by its context window, and it may face limitations when attempting to recall information from disparate sources or outside its training data.

- LaMDA: LaMDA’s explicit memory system grants it a distinct advantage. It can access and integrate information from external sources, enhancing its knowledge base and memory capabilities. This allows LaMDA to provide more informative and factually accurate responses, even when dealing with unfamiliar or niche topics.

However, its reliance on external sources can make it vulnerable to inaccuracies or biases present in the underlying data.

Conclusion

Understanding the nuances of memory in LLMs like Kami is crucial for comprehending their strengths, limitations, and future development. While these models exhibit impressive memory capabilities, their limitations in capacity, retention, and accuracy highlight the ongoing quest to develop AI systems with more human-like memory functionalities.

The future of AI hinges on overcoming these challenges, paving the way for even more sophisticated and versatile applications.

Q&A

What is the difference between short-term and long-term memory in AI?

Short-term memory refers to the ability to store information temporarily for immediate use, while long-term memory involves storing information for extended periods and retrieving it when needed. These concepts are analogous to human memory, but their implementation in AI systems can vary significantly.

How does Kami’s memory affect its ability to learn and adapt?

Within a conversation, Kami’s memory lets it adapt its responses to what has already been said. It does not learn permanently from individual chats: lasting changes require retraining, and in-conversation adaptation is limited by the context window.

Can Kami’s memory be manipulated or influenced?

Yes. Kami’s long-term memory is shaped by the data it is trained on, and its short-term memory by whatever text appears in the conversation. This raises concerns about potential biases and manipulation of the model’s output.

Are there any ethical implications associated with Kami’s memory capabilities?

The ability of LLMs like Kami to retain and recall information raises ethical concerns about data privacy, potential misuse of stored data, and the need for responsible AI development.